Helping Institutions Towards APIs

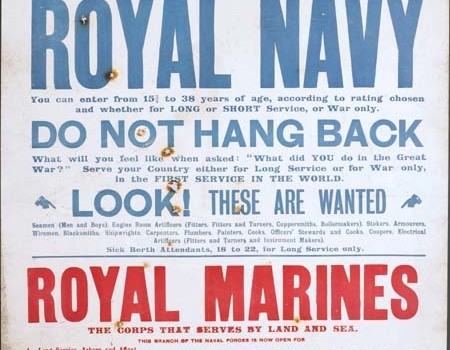

(Image: Wanted immediately for the Royal Navy, © Museum of London)

In this post, I’d like to go into some technical detail regarding point five in our strategy — helping institutions to set up their own APIs. I’ll talk about how we’ve set up our Solr, and document the configuration files we’ve used so that any of the institutions we have helped can recreate our work.

Solr Single Core and The Solr Schema File

We were already running Tomcat on our servers, so we set Solr up to run inside it rather than installing another Java servlet container. Our server runs Ubuntu 12.04.2, and we installed Solr the easy way (using apt), which installed v1.2. A quick restart of both Apache and Tomcat, and Solr was ready to go, just waiting for configuration and data. Most of the steps from this point onwards were taken with support from the Solr wiki.

Configuration

The first step in setting up Solr is the schema file, which is under /usr/share/solr/conf on Ubuntu. The main purpose of this file is to tell Solr about the structure of the data which you will be loading into it. Both the Ubuntu install and the OSX install I used for testing came with a sample schema file from the Solr tutorial, and it is fairly straightforward to use this file as a template and just change sections for your data.

The first half of the file sets up the internal Solr data <types> you want to have available on your server, and for the relatively simple datasets we were loading there was no need to alter that section. Skipping to the <fields> section, we started off testing with data from the Welsh Voices of the Great War project, and you can take a look at our example v1.2 schema files. The main data types in use for the Welsh Voices data were strings (for short text fields), text (for paragraphs of text; note that in Solr v1.4 this has become text_general), and ints.

After the main fields section, the file contains some extra fields which offer more functionality. In particular, there is a catchall text field which Solr uses to combine all the text fields in a record and to perform default searching (if the user doesn’t specify a particular field to search on). It is not necessary to do anything to these fields here, as there is one further configuration section lower down the file which sets them up. After the regular fields, you will see dynamicFields, which again do not need altering in our simple use case. Then, after the <fields> section, you will see the uniqueKey, defaultSearchField and defaultOperator configuration entries, followed by copyField entries. The copyField entries allow you to specify which of your metadata fields it makes sense for default queries to search, and they populate the catchall field mentioned above.
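To make the layout described above more concrete, here is a minimal sketch in Python that generates a cut-down schema fragment. The field names (`id`, `title`, `description`, `year`) and the searchable-field list are hypothetical examples, not our real Welsh Voices schema, and the `<types>` section is deliberately left out, so the output is an illustration of the structure rather than a loadable schema file.

```python
import xml.etree.ElementTree as ET

# Hypothetical fields for illustration: (name, Solr type)
FIELDS = [
    ("id", "string"),
    ("title", "string"),
    ("description", "text"),
    ("year", "int"),
]
CATCHALL = "text"                      # catchall field used for default searching
SEARCHABLE = ["title", "description"]  # fields copied into the catchall


def build_schema_fragment():
    """Build the <fields> section plus the entries that follow it."""
    schema = ET.Element("schema", name="example", version="1.2")
    fields = ET.SubElement(schema, "fields")
    for name, field_type in FIELDS:
        ET.SubElement(fields, "field", name=name, type=field_type,
                      indexed="true", stored="true")
    # The catchall is indexed for searching but not stored, and holds many values.
    ET.SubElement(fields, "field", name=CATCHALL, type="text",
                  indexed="true", stored="false", multiValued="true")

    ET.SubElement(schema, "uniqueKey").text = "id"
    ET.SubElement(schema, "defaultSearchField").text = CATCHALL
    ET.SubElement(schema, "solrQueryParser", defaultOperator="OR")
    # One copyField per metadata field that default queries should search.
    for source in SEARCHABLE:
        ET.SubElement(schema, "copyField", source=source, dest=CATCHALL)
    return schema


schema_xml = ET.tostring(build_schema_fragment(), encoding="unicode")
print(schema_xml)
```

In practice you would edit the sample schema file by hand; generating it like this is only a convenient way to show which entries sit inside `<fields>` and which come after it.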

A lot of the testing I had done was with v1.4 on OSX, so when I tried to copy the Solr schema I had worked out in testing up to our production server, I got the relatively unhelpful Solr core dump page. Be warned: as a product Solr changes between releases, and the schema files are not necessarily backwards compatible!

Data Import

Solr supports uploading your data from a variety of formats using import handlers. For all the sources we worked with, the relevant handler was the CSV import handler (although the XML one stood out as being of possible interest for other providers). CSV can be uploaded to a running instance of Solr using a utility such as cURL, and this is what we did, although you can also enable streaming from the server’s own filesystem. Don’t forget to include the commit option in the update, otherwise you will be scratching your head as to where your data is!
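We used cURL for the upload, but the same request can be sketched in Python. The base URL below (`http://localhost:8080/solr`, Tomcat's usual default port) and the sample CSV columns are assumptions for illustration; the handler path `/update/csv` and the `commit=true` parameter are the parts that matter. The sketch only builds the request and does not send it.

```python
import urllib.parse
import urllib.request

# Assumption: Solr is served by Tomcat on its usual default port.
SOLR_BASE = "http://localhost:8080/solr"


def build_csv_update_request(csv_bytes, base_url=SOLR_BASE, commit=True):
    """Build (but do not send) a POST to Solr's CSV update handler."""
    url = base_url + "/update/csv"
    if commit:
        # Without commit=true the rows are accepted but not yet searchable.
        url += "?" + urllib.parse.urlencode({"commit": "true"})
    return urllib.request.Request(
        url,
        data=csv_bytes,
        headers={"Content-Type": "text/plain; charset=utf-8"},
        method="POST",
    )


# Hypothetical two-line CSV: a header row, then one record.
sample = "id,title,year\n1,Example record,1914\n".encode("utf-8")
request = build_csv_update_request(sample)
print(request.get_method(), request.full_url)
# To send it to a running Solr: urllib.request.urlopen(request)
```

The first row of the CSV is read as the field names, so the column headings need to match the field names in your schema.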

Solr Multicore

The next step with our Solr configuration was adding in data from different providers. If we had been completely aggregating, then it might have made sense to put all the data into the same Solr instance, albeit with a particularly comprehensive schema file. Another alternative would be to run multiple Solr applications in Tomcat. However, Solr has another option — you can run it in multicore mode, where one running Solr is partitioned into separate cores, each one holding an isolated data set. Once you have converted Solr to multicore mode, you can add, remove, start and stop cores without even restarting the server, which made it a great solution for us to add in more providers as they came up.

Multicore mode is a little trickier to set up, and requires you to edit the main Solr configuration file to switch it on. In terms of keeping track of what is happening, it is also slightly easier if you know from the beginning that you are going to run multicore mode, and set your Solr up accordingly. However, if you do start in single core mode, knowing a little more about where Solr is saving your data will allow you to tweak the configuration files and preserve your single core as one of the new multiple cores.

You can take a look at our configuration files — in terms of placement, you need to specify for each core a name and a directory to store the data. Ubuntu Solr already has a suggested directory structure in place which is easy to use, or you can craft your own.
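As a rough illustration of the name-plus-directory pairing, the sketch below generates a multicore `solr.xml` in the older `<solr>/<cores>/<core>` layout. The core names (`provider_a`, `provider_b`) are hypothetical, and using the core name as its `instanceDir` is just one convention, not necessarily the directory structure Ubuntu suggests.

```python
import xml.etree.ElementTree as ET

# Hypothetical core names; each one gets its own directory of the same name.
CORES = ["provider_a", "provider_b"]


def build_solr_xml(core_names):
    """Return a multicore solr.xml listing one <core> per provider."""
    solr = ET.Element("solr", persistent="true")
    # adminPath exposes the core admin handler; leave it out to disable it.
    cores = ET.SubElement(solr, "cores", adminPath="/admin/cores")
    for name in core_names:
        ET.SubElement(cores, "core", name=name, instanceDir=name)
    return ET.tostring(solr, encoding="unicode")


solr_xml = build_solr_xml(CORES)
print(solr_xml)
```

Each `instanceDir` then needs its own `conf` directory holding that core's schema and solrconfig.xml.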

You can also access the cores directly and run your own queries against specific ones rather than using our API!

Welsh Voices of the Great War

British Library India Office

British Postal Museum
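For anyone who wants to query a core directly, a query URL can be put together along these lines. This is a sketch only: the base URL, the core name `provider_a` and the field name `title` are placeholders rather than our real endpoints, so substitute the address of the core you want to search.

```python
import urllib.parse


def core_select_url(core, query, rows=10, base_url="http://localhost:8080/solr"):
    """Build a search URL against one core's select handler, asking for JSON."""
    params = urllib.parse.urlencode({"q": query, "rows": rows, "wt": "json"})
    return f"{base_url}/{urllib.parse.quote(core)}/select?{params}"


# Hypothetical core and field names.
url = core_select_url("provider_a", "title:navy")
print(url)
```

In multicore mode the core name sits between the Solr base path and the handler, which is the only difference from querying a single core setup.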

Provider Configuration

Once our cores were spun up, the rest was easy — each core has a separate configuration directory and separate schema files, so it was just a case of repeating the first step for each new provider. Our final schema files for the providers are given below!

Security

The last (but absolutely not least) thing to mention with setting up your own Solr instance is security. There’s an interesting entry on the Solr wiki which basically notes that a running Solr, by default, can be completely administered over the web, including adding more data, deleting your data, etc. Solr as an app does not concern itself with reproducing security features which have been done better elsewhere, and so running your own instance will necessitate a degree of systems architecture depending on your use case. You can firewall your instance off from everything except your own servers, for example, which is great if you want to dump in a load of data you found somewhere and use Solr for your own (or your own institution’s) purposes, but not so great if your intent is to offer an API to the world. Or you could control access to your Solr instance by running it behind your own proxy server, which limits the HTTP requests people can send through. Another option is to make use of features of Tomcat (or similar) to add authentication control to the admin pages or even the search pages. On our running instance, after loading the data, I have simply disabled administrative features by commenting out the update and admin requestHandlers in the solrconfig.xml file for each core, as well as in the multicore solr.xml file.
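To give a flavour of the proxy option, here is a minimal sketch of the kind of allow-list rule such a proxy might apply: only GET requests to a core's select handler get through. It is a simplification under stated assumptions (a `/solr/<core>/select` URL layout, and hypothetical names throughout) and not what we run; a real deployment would express the same rule in the proxy server's own configuration.

```python
from urllib.parse import urlsplit

ALLOWED_METHODS = {"GET"}
ALLOWED_HANDLER = "select"


def is_request_allowed(method, url_path):
    """Allow only read-only searches of the form /solr/<core>/select."""
    if method.upper() not in ALLOWED_METHODS:
        return False
    # Drop the query string, then split the path into its non-empty segments.
    segments = [s for s in urlsplit(url_path).path.split("/") if s]
    return (
        len(segments) == 3
        and segments[0] == "solr"
        and segments[2] == ALLOWED_HANDLER
    )


# Hypothetical core name "provider_a".
print(is_request_allowed("GET", "/solr/provider_a/select?q=navy"))
print(is_request_allowed("POST", "/solr/provider_a/update?commit=true"))
print(is_request_allowed("GET", "/solr/admin/cores"))
```

An allow-list like this fails closed: update, admin and any handler added later stay blocked unless they are explicitly let through.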

Summary

Hopefully I have conveyed how easy it is to drop Solr onto your Tomcat server and get your API running in very little time. All the providers we worked with were able to supply metadata in CSV or XML format, which dropped straight into Solr and was searchable almost immediately.

In my last post I talked a little about the strategy for our federated API, which performs mappings between various APIs in the field. In my next post I want to cover the specifics of these mappings and tie off our technological approach.